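1. Introduction

1.1 What is k8s

Kubernetes (commonly abbreviated as K8s) is an open-source container orchestration and management platform that automates the deployment, scaling, and operation of containerized applications. It was developed by Google and donated to the Cloud Native Computing Foundation (CNCF). Kubernetes aims to solve the problems of managing and deploying containerized applications, making it easier for developers to build, deliver, and run them.

1.2 Advantages of k8s

Some of the main advantages of Kubernetes are: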

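- Automation and self-healing: Kubernetes monitors application state and repairs failures automatically so that applications keep running. If a container or node fails, Kubernetes restarts the container or moves the workload to another node.

- Elastic scaling: Kubernetes lets you increase or decrease the number of application instances as needed. With autoscaling, the size of an application follows the load, meeting traffic demand and saving resources when demand drops.

- Load balancing: Kubernetes has built-in load balancing that distributes incoming traffic across container instances, so that an application can handle high traffic effectively.

- Automated deployment and rolling updates: With declarative configuration, Kubernetes makes it easy to deploy and update applications. Rolling updates replace old container versions step by step, which reduces application downtime.

- Multi-environment support: Kubernetes makes it easy to move an application between environments (for example development, testing, and production) while keeping it consistent across stages.

- Declarative configuration: You describe an application's configuration and deployment requirements in Kubernetes YAML files. You define the desired state clearly, and Kubernetes is responsible for bringing the system to that state.

- Custom resources and extensibility: Kubernetes lets you create custom resources and controllers, extending the platform to meet the needs of a specific application or business.

- Multiple container runtimes: Kubernetes supports several container runtimes, such as Docker and containerd, so you can choose the runtime that best suits your application.

- Community and ecosystem: Kubernetes has a large community and a rich ecosystem of tools, plugins, and services that help you manage, monitor, and extend your applications.

- Cross-cloud and on-premises: Kubernetes runs on different cloud platforms and in on-premises environments, giving you a consistent deployment and management experience across infrastructures.

In short, Kubernetes provides powerful container orchestration and management features that help development and operations teams deploy, scale, and manage containerized applications more effectively. Its strengths are automation, elasticity, flexibility, and a rich ecosystem.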

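1.3 k8s components

Kubernetes consists of several components that work together to manage the life cycle of containerized applications. The list below covers the common components; the last three entries (Pods, Services, ConfigMaps and Secrets) are core API objects rather than components:

- Kubelet: An agent that runs on every node and manages the life cycle of containers. It communicates with the master nodes and makes sure the containers on its node run in the desired state.

- Kube-Proxy: Also runs on every node and maintains the network rules that implement Service exposure and load balancing. It updates the node's iptables rules according to the Service configuration.

- Kube-Scheduler: Watches for newly created Pods and schedules each one onto a suitable node in the cluster, based on conditions such as resource requirements, affinity, and anti-affinity.

- Kube-Controller-Manager: Runs a set of controllers that watch the state of cluster resources, keep the actual state consistent with the desired state, and repair it automatically when needed. Some of these controllers are:

  - Replication Controller and ReplicaSet controllers: Make sure the specified number of Pod replicas is running in the cluster.

  - Deployment controller: Manages rolling updates and version control of applications.

  - Namespace controller: Manages the creation, update, and deletion of namespaces.

- Etcd: A distributed key-value store that holds the cluster's configuration, state, and metadata. Kubernetes stores all of its critical information in Etcd, including configuration, deployments, and service discovery data.

- API Server: Exposes the Kubernetes cluster API, through which users and other components interact with the cluster. All resources and operations are accessed and managed through the API Server.

- Container Runtime: Runs the containers. Common container runtimes include Docker and containerd.

- Cloud Controller Manager: Runs on cloud platforms and integrates the Kubernetes cluster with features of a specific cloud provider, such as autoscaling and load balancing.

- Admission Controllers: Intercept and modify requests entering the Kubernetes cluster. They can validate requests, set default values, and mutate requests to make sure cluster policies are followed.

- Ingress Controller: Manages Ingress resources and routes external traffic to Services inside the cluster.

- Pods: The smallest schedulable unit in Kubernetes. A Pod contains one or more containers that can share network and storage and form one logical unit.

- Services: Define how a group of Pods is accessed, and provide load balancing and service discovery so that applications can communicate across Pods.

- ConfigMaps and Secrets: Keep configuration and sensitive data separate from the application and provide them to containers as environment variables or volumes.

These components work together to give Kubernetes its container orchestration and management capabilities. Each one plays a different role in making sure containerized applications run in a highly available, automated, and elastic way.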

2. Installing and deploying k8s (kubeadm)

Note: the k8s version installed in this article is v1.17.11, which cannot be upgraded directly with kubeadm. An upgrade requires version >= 1.18.0

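2.1 Installation methods

Kubernetes can be installed in several ways; choose the one that fits your requirements and environment. Some common methods are:

- Minikube: If you want to bring up a single-node Kubernetes cluster quickly in a local development environment, use Minikube. Minikube creates a virtual machine and runs a single-node Kubernetes cluster inside it. It is suited to learning and development.

- Kubeadm: Kubeadm is an officially maintained tool for deploying Kubernetes clusters quickly in production. It sets up a highly configurable cluster across multiple nodes and suits smaller production environments.

- Kubespray (formerly Kargo): Kubespray is an open-source project for deploying highly customized Kubernetes clusters. It supports many operating systems and cloud platforms, and deployments can be tailored through configuration.

- Managed Kubernetes Services: The major cloud providers (such as AWS, Google Cloud, and Azure) offer managed Kubernetes services, such as Amazon EKS, Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS). These services take care of cluster maintenance, upgrades, and availability for you.

- Rancher: Rancher is an open-source container management platform that helps you deploy and manage Kubernetes clusters easily on different infrastructures.

- K3s: K3s is a lightweight Kubernetes distribution designed to make installation and management lighter in resource-constrained environments such as edge computing.

- Custom installation scripts: You can write your own installation scripts for your environment, following the official Kubernetes documentation. This lets you adapt the installation to specific requirements and architectures.

For beginners, Minikube and Managed Kubernetes Services are the simpler ways to get started. For production, Kubeadm, Kubespray, and Rancher offer more flexibility and control. When choosing a method, take your skill level, the deployment scale, and the underlying infrastructure into account. Whichever method you choose, follow the official documentation and best practices so that the installation is correct and reliable.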

2.2 Deployment process

Preparation before installing:

Disable the swap partition

Disable SELinux

Stop the iptables and NetworkManager services

Synchronize the server time

# ntpdate ntp.aliyun.com

# hwclock -w

Tune kernel parameters and raise resource limits

[root@centos7 ~]# cat /etc/sysctl.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-arptables = 1

net.ipv4.tcp_tw_reuse = 0

net.ipv4.ip_nonlocal_bind = 1

net.core.somaxconn = 32768

net.netfilter.nf_conntrack_max = 1000000

vm.swappiness = 0

vm.max_map_count = 655360

fs.file-max = 655360

[root@centos7 ~]# cat /etc/security/limits.conf

#<domain> <type> <item> <value>

* soft core unlimited

* hard core unlimited

* soft nproc 1000000

* hard nproc 1000000

* soft nofile 1000000

* hard nofile 1000000

* soft memlock 24800

* hard memlock 24800

* soft msgqueue 8192000

* hard msgqueue 8192000

root soft core unlimited

root hard core unlimited

root soft nproc 1000000

root hard nproc 1000000

root soft nofile 1000000

root hard nofile 1000000

root soft memlock 24800

root hard memlock 24800

root soft msgqueue 8192000

root hard msgqueue 8192000
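The preparation steps (swap, SELinux, services, time sync) are listed without commands. Below is a minimal sketch of how they might be scripted on CentOS 7. The function names `comment_out_swap`, `disable_selinux_config`, and `prepare_node` are hypothetical, GNU sed is assumed, and firewalld is assumed to be the firewall service on a minimal CentOS 7 install. Loading `br_netfilter` before `sysctl -p` is included because the `net.bridge.*` keys only exist once that module is loaded.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, paths are the CentOS 7 defaults.

# Comment out every active swap entry in an fstab-style file (GNU sed).
comment_out_swap() {
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Set SELINUX=disabled in an SELinux config file (takes effect after a reboot).
disable_selinux_config() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Run all preparation steps on a real node. Must be executed as root.
prepare_node() {
    swapoff -a                                  # turn swap off for the running system
    comment_out_swap /etc/fstab                 # keep it off after a reboot
    setenforce 0 || true                        # permissive now; fails harmlessly if already disabled
    disable_selinux_config /etc/selinux/config
    # Assumption: firewalld is the firewall service on this host.
    systemctl disable --now firewalld NetworkManager || true
    ntpdate ntp.aliyun.com && hwclock -w        # sync time, then write it to the hardware clock
    modprobe br_netfilter                       # makes the net.bridge.* sysctl keys available
    sysctl -p                                   # load /etc/sysctl.conf
}
```

Nothing runs until `prepare_node` is called explicitly, so the two file helpers can be tried first on copies of `/etc/fstab` and `/etc/selinux/config`.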

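2.2.1 Steps

- Prepare the base environment

- Deploy Harbor and a highly available HAProxy reverse proxy, to make the control-plane API highly available

- Install the specified versions of kubeadm, kubelet, kubectl, and docker on all master nodes

- Install the specified versions of kubeadm, docker, and kubelet on all worker nodes (kubectl is optional there)

- Run the kubeadm init command on a master node to create the cluster

- Verify the master node status

- On each worker node, use the kubeadm command to join the cluster

- Verify the worker node status

- Create a pod and test that networking works

- Deploy the dashboard

- Upgrade the cluster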

2.2.2 Deployment environment

In a virtualized environment, it is best to take a snapshot of every important node

**Operating system:** CentOS 7.8

**Installation type:** minimal install

**Deployment platform:** VMware 16 Pro

| Role | Hostname | IP | Spec |
|---|---|---|---|
| k8s-master-01 | master-01.example.local | 10.0.0.11 | 2 vCPU, 2 GB RAM |
| k8s-master-02 | master-02.example.local | 10.0.0.12 | 2 vCPU, 2 GB RAM |
| k8s-master-03 | master-03.example.local | 10.0.0.13 | 2 vCPU, 2 GB RAM |
| ha-01 | ha-01.example.local | 10.0.0.14 | 1 vCPU, 2 GB RAM |
| ha-02 | ha-02.example.local | 10.0.0.15 | 1 vCPU, 2 GB RAM |
| harbor | harbor.example.local | 10.0.0.16 | 2 vCPU, 4 GB RAM |
| node-01 | node-01.example.local | 10.0.0.17 | 1 vCPU, 2 GB RAM |
| node-02 | node-02.example.local | 10.0.0.18 | 1 vCPU, 2 GB RAM |
| node-03 | node-03.example.local | 10.0.0.19 | 1 vCPU, 2 GB RAM |

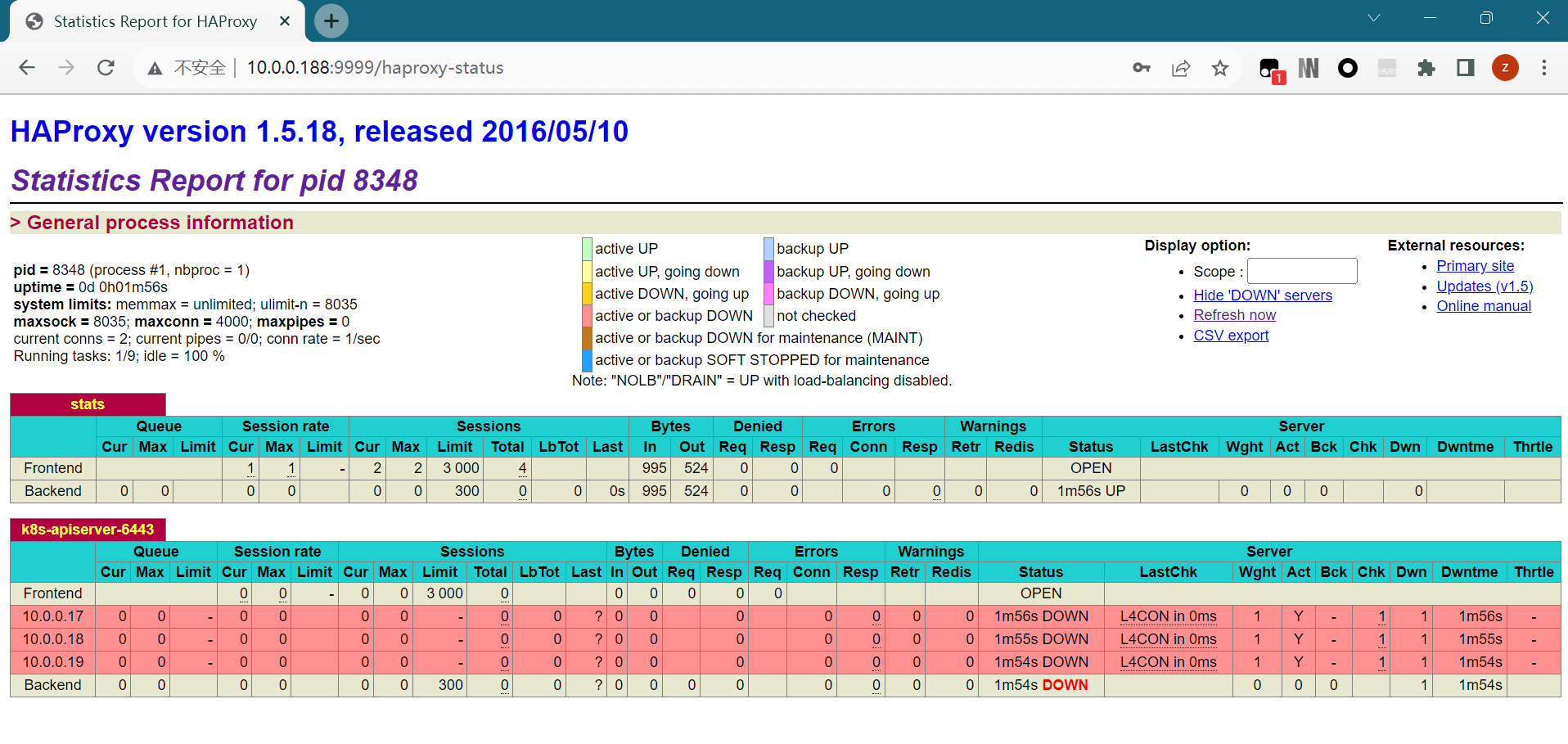

2.3 Deploying the highly available reverse proxy

A highly available reverse proxy built on Keepalived + HAProxy makes the k8s apiserver service highly available

ha-01:

yum install -y keepalived

yum install -y haproxy

cp /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

[root@ha-01 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance k8s {

state MASTER

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.188 label eth0:1

10.0.0.199 label eth0:2

}

}

[root@ha-01 ~]# tail -n 60 /etc/haproxy/haproxy.cfg

...

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth admin:passwd

listen k8s-apiserver-6443

bind 10.0.0.188:6443

mode tcp

balance roundrobin

server 10.0.0.11 10.0.0.11:6443 check inter 3s fall 3 rise 5

server 10.0.0.12 10.0.0.12:6443 check inter 3s fall 3 rise 5

server 10.0.0.13 10.0.0.13:6443 check inter 3s fall 3 rise 5

listen k8s-node-80

bind 10.0.0.199:80

mode tcp

balance roundrobin

server 10.0.0.17 10.0.0.17:30004 check inter 3s fall 3 rise 5

server 10.0.0.18 10.0.0.18:30004 check inter 3s fall 3 rise 5

server 10.0.0.19 10.0.0.19:30004 check inter 3s fall 3 rise 5

[root@ha-01 ~]# systemctl enable --now keepalived.service

[root@ha-01 ~]# systemctl enable --now haproxy.service

ha-02:

yum install -y keepalived

yum install -y haproxy

cp /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

[root@ha-02 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance k8s {

state BACKUP

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.188 label eth0:1

10.0.0.199 label eth0:2

}

}

[root@ha-02 ~]# tail -n 60 /etc/haproxy/haproxy.cfg

...

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth admin:passwd

listen k8s-apiserver-6443

bind 10.0.0.188:6443

mode tcp

balance roundrobin

server 10.0.0.11 10.0.0.11:6443 check inter 3s fall 3 rise 5

server 10.0.0.12 10.0.0.12:6443 check inter 3s fall 3 rise 5

server 10.0.0.13 10.0.0.13:6443 check inter 3s fall 3 rise 5

listen k8s-node-80

bind 10.0.0.199:80

mode tcp

balance roundrobin

server 10.0.0.17 10.0.0.17:30004 check inter 3s fall 3 rise 5

server 10.0.0.18 10.0.0.18:30004 check inter 3s fall 3 rise 5

server 10.0.0.19 10.0.0.19:30004 check inter 3s fall 3 rise 5

[root@ha-02 ~]# systemctl enable --now keepalived.service

[root@ha-02 ~]# systemctl enable --now haproxy.service

# The VIPs are currently held by ha-01

[root@ha-01 ~]# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.188 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:0c:29:cd:4b:70 txqueuelen 1000 (Ethernet)

[root@ha-01 ~]# ifconfig eth0:2

eth0:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.199 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:0c:29:cd:4b:70 txqueuelen 1000 (Ethernet)

View the HAProxy status page in a browser (port 9999, path /haproxy-status, as configured above)

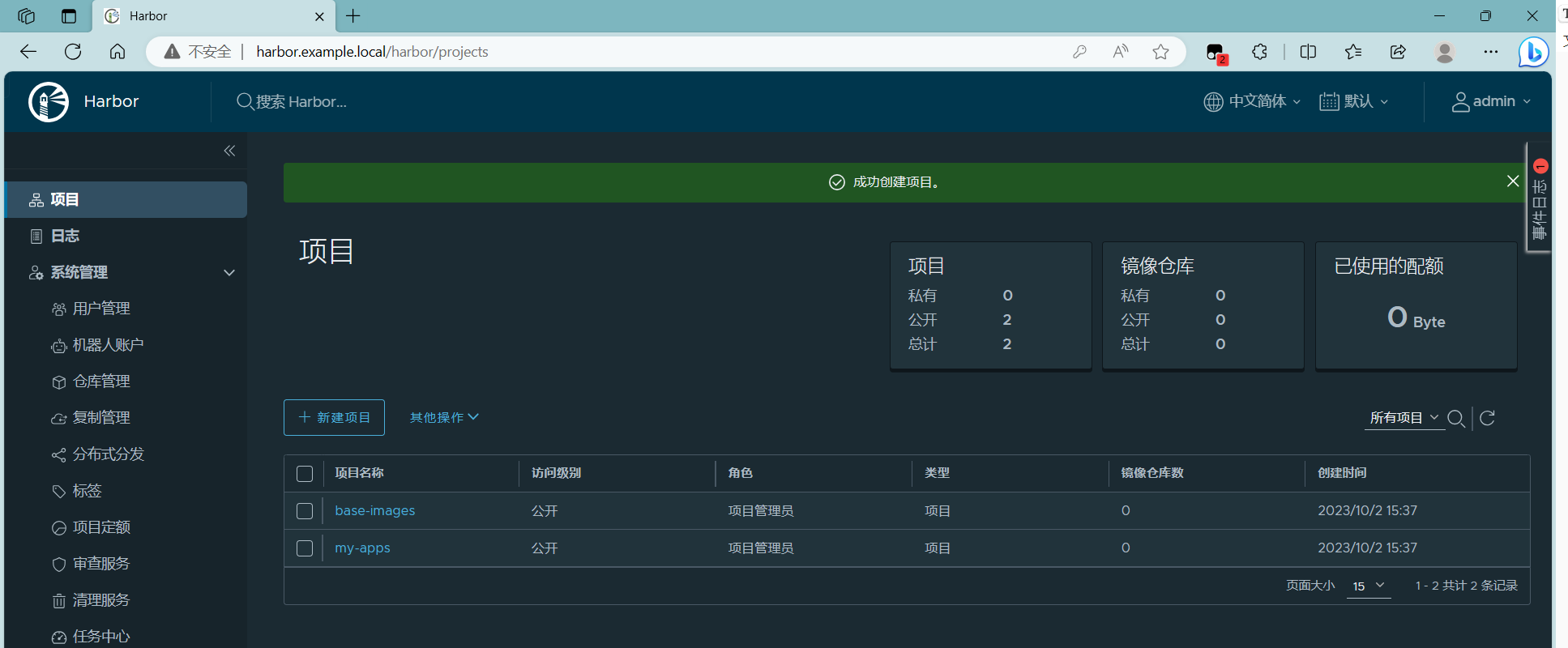
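Besides the status page, the proxy path can be checked from a shell. The sketch below is an illustration, not part of the original setup: `port_open` and `check_apiserver_path` are hypothetical helper names, and the check relies on bash's `/dev/tcp` pseudo-device and on `timeout` from coreutils. Note that a successful connection to the VIP only shows that HAProxy is listening; the backends on 10.0.0.11-13 stay unreachable until the cluster is initialized.

```shell
#!/bin/sh
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# Uses bash's /dev/tcp pseudo-device, so bash must be installed.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Check the apiserver VIP and each backend from the HAProxy config above.
check_apiserver_path() {
    for target in 10.0.0.188 10.0.0.11 10.0.0.12 10.0.0.13; do
        if port_open "$target" 6443; then
            echo "$target:6443 reachable"
        else
            echo "$target:6443 NOT reachable"
        fi
    done
}
```

Calling `check_apiserver_path` on ha-01 or ha-02 prints one line per address.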

2.4 Deploying Harbor

https://goharbor.io/docs/2.9.0/install-config/installation-prereqs/

https://docs.docker.com/engine/install/centos/

sudo yum remove -y docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-engine

# Install Docker

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

sudo yum install -y docker-ce docker-ce-cli containerd.io docker-compose-plugin

systemctl enable --now docker

[root@harbor harbor]# docker -v

Docker version 24.0.6, build ed223bc

# Download and install Harbor

wget https://github.com/goharbor/harbor/releases/download/v2.8.4/harbor-offline-installer-v2.8.4.tgz

tar xf harbor-offline-installer-v2.8.4.tgz

cd harbor

[root@harbor harbor]# cp harbor.yml.tmpl harbor.yml

# Disable HTTPS (the https block is commented out)

[root@harbor harbor]# grep -A 7 "https related config" harbor.yml

# https related config

#https:

# # https port for harbor, default is 443

# port: 443

# # The path of cert and key files for nginx

# certificate: /your/certificate/path

# private_key: /your/private/key/path

# Set the hostname

[root@harbor harbor]# grep -m 1 hostname: harbor.yml

hostname: harbor.example.local

# Change the admin password

[root@harbor harbor]# grep -m 1 passwd harbor.yml

harbor_admin_password: passwd

# Start the installation

./install.sh

# Installation path of docker-compose

[root@harbor harbor]# rpm -ql docker-compose-plugin |grep docker-compose

/usr/libexec/docker/cli-plugins/docker-compose

Log in to Harbor and create a project

Note: add a hosts entry on the Windows host yourself: 10.0.0.16 harbor.example.local

2.5 Installing Docker on all nodes

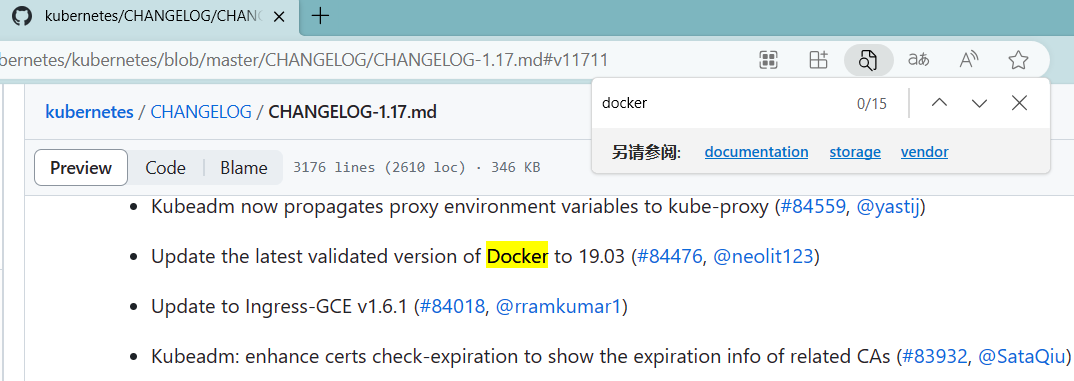

Release v1.17.11 · kubernetes/kubernetes (github.com)

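Install Docker on all nodes (here this means the master and worker nodes only)

Note: in production, use the Docker version specified for your k8s release; see the CHANGELOG of that release for details

kubernetes/CHANGELOG/CHANGELOG-1.17.md at master · kubernetes/kubernetes (github.com)

Install the specified Docker version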

sudo yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-engine

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# Docker 19.03.9-3 is installed directly here

# yum list docker-ce --showduplicates | sort -r | grep 19.03

docker-ce.x86_64 3:19.03.9-3.el7 docker-ce-stable

# sudo yum install -y docker-ce-19.03.9 docker-ce-cli-19.03.9 containerd.io docker-compose-plugin

# docker version

Client: Docker Engine - Community

Version: 19.03.9

API version: 1.40

Go version: go1.13.10

Git commit: 9d988398e7

Built: Fri May 15 00:25:27 2020

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.9

# HTTPS is not enabled on the Harbor registry, so this option has to be added to the service file

# dockerd --help |grep insec

--insecure-registry list Enable insecure registry communication

# grep insecure-registry /lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --insecure-registry harbor.example.local

# systemctl enable --now docker

2.6 Installing the cluster bootstrap tools on all nodes

2.6.1 Configuring a mirror repository in China

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2.6.2 Installing the bootstrap tools

Note: kubectl is a client-side command, so installing it on worker nodes is optional

yum install -y kubelet-1.17.11 kubectl-1.17.11 kubeadm-1.17.11

systemctl enable --now kubelet

# Set up shell completion for kubeadm subcommands

mkdir ~/.kube/

kubeadm completion bash > ~/.kube/kubeadm_completion.sh

source ~/.kube/kubeadm_completion.sh

2.6.3 Using the kubeadm command

Show help

kubeadm --help

Show the version

[root@master-01 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.11", GitCommit:"ea5f00d93211b7c80247bf607cfa422ad6fb5347", GitTreeState:"clean", BuildDate:"2020-08-13T15:17:52Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}

List the images required to deploy a cluster of a given version

[root@master-01 ~]# kubeadm config images list --kubernetes-version v1.17.11

W0930 17:59:27.921963 6797 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0930 17:59:27.922001 6797 validation.go:28] Cannot validate kubelet config - no validator is available

k8s.gcr.io/kube-apiserver:v1.17.11

k8s.gcr.io/kube-controller-manager:v1.17.11

k8s.gcr.io/kube-scheduler:v1.17.11

k8s.gcr.io/kube-proxy:v1.17.11

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.5

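These images are hosted outside China, and for network reasons the download will most likely fail. So switch the image addresses to the Alibaba Cloud mirror and pull the images manually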

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.11

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.11

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.11

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.11

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5
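The seven pull commands above follow one pattern: keep the image name and tag, replace the registry prefix. A small sketch of that mapping is shown below; `to_mirror` and `pull_from_mirror` are hypothetical helper names, and the loop assumes the mirror carries every image under the same name and tag, as it does for the list above.

```shell
#!/bin/sh
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# Map an upstream image name to its counterpart in the mirror,
# e.g. k8s.gcr.io/pause:3.1 -> $MIRROR/pause:3.1
to_mirror() {
    printf '%s/%s\n' "$MIRROR" "${1##*/}"
}

# Pull every image kubeadm lists for the given version from the mirror.
# Warnings from kubeadm are discarded so only image names reach the loop.
pull_from_mirror() {
    kubeadm config images list --kubernetes-version "$1" 2>/dev/null |
    while read -r image; do
        docker pull "$(to_mirror "$image")"
    done
}
```

For example, `pull_from_mirror v1.17.11` would issue the same pulls as the manual list. kubeadm can also pull the images itself with `kubeadm config images pull --image-repository=... --kubernetes-version=...`, which avoids the name mapping entirely.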

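2.7 k8s single-node deployment

2.7.1 Starting the deployment

First take a snapshot of master-01 named init, so that it is easy to roll back later

master-01 is used for this demonstration

# If the command hangs or times out, add --v=6 to see detailed error output

[root@master-01 ~]# kubeadm init --apiserver-advertise-address=10.0.0.11 --apiserver-bind-port=6443 --kubernetes-version=v1.17.11 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=example.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

The installation result is printed to the console. It is best to save this output, because it is needed later for operations such as joining worker nodes to the cluster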

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

# Run the following three commands to be able to operate the cluster with kubectl

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

# Run this command on a worker node to join it to the cluster

kubeadm join 10.0.0.11:6443 --token s14pux.a81q4ai54mpumsz4 \

--discovery-token-ca-cert-hash sha256:5ab171bccb95245c209ddcbb614ae943b31b05e3a4ce2eb47d349655955c185f

2.7.2 Verifying the result

[root@master-01 ~]# kubectl get pod

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@master-01 ~]#

[root@master-01 ~]# mkdir -p $HOME/.kube

[root@master-01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master-01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master-01 ~]# kubectl get pod

No resources found in default namespace.

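2.7.3 Deploying the network add-on

Network add-ons supported by k8s: Installing Addons | Kubernetes (https://kubernetes.io/docs/concepts/cluster-administration/addons/)

flannel is deployed here: flannel-io/flannel: flannel is a network fabric for containers, designed for Kubernetes (github.com)

Download the YAML file and change Network to the pod CIDR planned at cluster initialization, 10.100.0.0/16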

[root@master-01 ~]# wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

[root@master-01 ~]# grep -m 1 Network kube-flannel.yml

"Network": "10.100.0.0/16",

Start the deployment

[root@master-01 ~]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

serviceaccount/flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

# Deployed successfully

[root@master-01 ~]# kubectl get pod -A | grep flannel

kube-flannel kube-flannel-ds-zvcp7 1/1 Running 0 2m9s

Remove the taint from master-01

# By default the master is tainted, so pods are not scheduled onto it

[root@master-01 ~]# kubectl describe node master-01.example.local | grep Taints

Taints: node-role.kubernetes.io/master:NoSchedule

# Remove the taint from master-01

[root@master-01 ~]# kubectl taint nodes master-01.example.local node-role.kubernetes.io/master:NoSchedule-

node/master-01.example.local untainted

Deploy busybox to verify the pod network

[root@master-01 ~]# kubectl run my-container --image=busybox --command -- bash

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

[root@master-01 ~]# kubectl run my-container --image=busybox --command -- ping www.baidu.com

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/my-container created

[root@master-01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

my-container-84ff747745-sjd97 1/1 Running 0 10s

# The network works

[root@master-01 ~]# kubectl exec -it my-container-84ff747745-sjd97 -- sh

/ # ping www.baidu.com -c 3

PING www.baidu.com (14.119.104.189): 56 data bytes

64 bytes from 14.119.104.189: seq=0 ttl=127 time=9.193 ms

64 bytes from 14.119.104.189: seq=1 ttl=127 time=9.475 ms

64 bytes from 14.119.104.189: seq=2 ttl=127 time=9.668 ms

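2.8 k8s multi-node deployment (high availability)

All three master nodes are used in this demonstration. Because master-01 was used for the single-node deployment, first restore it to the init snapshot (without a snapshot, see kubeadm reset)

2.8.1 Starting the deployment

Compared with the single-node deployment there is one extra option, --control-plane-endpoint, which points at the VIP of the highly available reverse proxy (10.0.0.188, referenced below through the hostname load-balancer.example.com). master-01 is again used to create the cluster (any master node will do)

If the load balancer is not configured correctly, the following error appears: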

Oct 1 14:06:58 master-01 kubelet: E1001 14:06:58.218726 2977 reflector.go:153] k8s.io/client-go/informers/factory.go:135: Failed to lisriver: Get https://load-balancer.example.com:6443/apis/storage.k8s.io/v1beta1/csidrivers?limit=500&resourceVersion=0: EOF

[root@master-01 ~]# echo 10.0.0.188 load-balancer.example.com >> /etc/hosts

[root@master-01 ~]# kubeadm init --apiserver-advertise-address=10.0.0.11 --control-plane-endpoint=load-balancer.example.com --apiserver-bind-port=6443 --kubernetes-version=v1.17.11 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=example.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

# Join a master node to the cluster

kubeadm join load-balancer.example.com:6443 --token iata77.l5yqheznrbz3i0jm \

--discovery-token-ca-cert-hash sha256:9cf6a8410183e55ff71f0f64f0aea35f3a1498e3e0e6311cf0571a3a2021931a \

--control-plane # the certificate key must be generated manually, see 2.8.2

Then you can join any number of worker nodes by running the following on each as root:

# Join a worker node to the cluster

kubeadm join load-balancer.example.com:6443 --token iata77.l5yqheznrbz3i0jm \

--discovery-token-ca-cert-hash sha256:9cf6a8410183e55ff71f0f64f0aea35f3a1498e3e0e6311cf0571a3a2021931a

# Set up the cluster credentials file

[root@master-01 ~]# mkdir -p $HOME/.kube

[root@master-01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master-01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Cluster information is retrieved successfully

[root@master-01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master-01.example.local Ready master 91m v1.17.11

# Deploy flannel, see 2.7.3

[root@master-01 ~]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

serviceaccount/flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@master-01 ~]# kubectl get pod -A |grep flannel

kube-flannel kube-flannel-ds-97c97 1/1 Running 0 94s

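Addendum: besides command-line options, the cluster can also be initialized from a YAML file (only the key commands are shown here)

# Generate the default init configuration

# kubeadm config print init-defaults > cluster-init.yaml

# Edit the configuration file as needed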

# cat cluster-init.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 1.2.3.4

bindPort: 6443

nodeRegistration:

criSocket: /var/run/dockershim.sock

name: master-01.example.local

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

type: CoreDNS

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: k8s.gcr.io

kind: ClusterConfiguration

kubernetesVersion: v1.17.0

networking:

dnsDomain: cluster.local

podSubnet: 10.100.0.0/16

serviceSubnet: 10.200.0.0/16

scheduler: {}

# Create the cluster

kubeadm init --config cluster-init.yaml

2.8.2 Adding master nodes

Generate the key required by the --control-plane option

[root@master-01 ~]# kubeadm init phase upload-certs --upload-certs

I1001 16:10:41.762369 58794 version.go:251] remote version is much newer: v1.28.2; falling back to: stable-1.17

W1001 16:10:46.609771 58794 validation.go:28] Cannot validate kube-proxy config - no validator is available

W1001 16:10:46.609786 58794 validation.go:28] Cannot validate kubelet config - no validator is available

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

c67c9859d32c5f7e1fd75cfca8569aac32ae4f9344fcd6b8d53ad9b998362e05 # this is the key that is needed

Run the following commands on master-02 and on master-03 to join them to the control plane

# echo 10.0.0.188 load-balancer.example.com >> /etc/hosts

# kubeadm join load-balancer.example.com:6443 --token iata77.l5yqheznrbz3i0jm \

--discovery-token-ca-cert-hash sha256:9cf6a8410183e55ff71f0f64f0aea35f3a1498e3e0e6311cf0571a3a2021931a \

--control-plane --certificate-key c67c9859d32c5f7e1fd75cfca8569aac32ae4f9344fcd6b8d53ad9b998362e05

...

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

# The nodes joined successfully

[root@master-02 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-01.example.local Ready master 132m v1.17.11

master-02.example.local Ready master 8m25s v1.17.11

master-03.example.local Ready master 14s v1.17.11

2.8.3 Adding worker nodes

Run the following commands on each worker node

echo 10.0.0.188 load-balancer.example.com >> /etc/hosts

kubeadm join load-balancer.example.com:6443 --token iata77.l5yqheznrbz3i0jm \

--discovery-token-ca-cert-hash sha256:9cf6a8410183e55ff71f0f64f0aea35f3a1498e3e0e6311cf0571a3a2021931a
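The token and hash in the join command come from the `kubeadm init` output. If that output was not saved, or the token has expired (the default TTL in the init configuration shown earlier is 24h), both values can be regenerated on a control-plane node. The sketch below follows the openssl pipeline from the kubeadm documentation; the function name `ca_cert_hash` is hypothetical, and an RSA CA key is assumed.

```shell
#!/bin/sh
# Compute the value for --discovery-token-ca-cert-hash from a CA certificate.
# Assumption: the CA uses an RSA key.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" |
        openssl rsa -pubin -outform der 2>/dev/null |
        openssl dgst -sha256 -hex |
        sed 's/^.* //'
}

# On a control-plane node:
#   kubeadm token create --print-join-command          # new token plus a ready-made join command
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```

`kubeadm token create --print-join-command` is usually enough on its own; the hash function is mainly useful for checking that a join command refers to the expected cluster CA.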

Result after all nodes have been added

[root@master-02 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-01.example.local Ready master 176m v1.17.11

master-02.example.local Ready master 52m v1.17.11

master-03.example.local Ready master 44m v1.17.11

node-01.example.local Ready <none> 95s v1.17.11

node-02.example.local Ready <none> 34m v1.17.11

node-03.example.local Ready <none> 74s v1.17.11
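Nodes typically report NotReady for a short while after joining, until the network add-on pod is running on them. A small polling helper, sketched below, can wait for the table above to show every node as Ready. The names `not_ready_nodes` and `wait_for_nodes` are hypothetical, and the parsing assumes the column layout of `kubectl get nodes` shown above.

```shell
#!/bin/sh
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
    awk 'NF && $2 != "Ready" { print $1 }'
}

# Poll until every node is Ready, for up to about 5 minutes.
wait_for_nodes() {
    tries=0
    while [ "$tries" -lt 30 ]; do
        out=$(kubectl get nodes --no-headers) || return 2
        pending=$(printf '%s\n' "$out" | not_ready_nodes)
        [ -z "$pending" ] && return 0
        echo "waiting for: $pending"
        tries=$((tries + 1))
        sleep 10
    done
    return 1
}
```

`wait_for_nodes` returns 0 once all nodes are Ready, 1 on timeout, and 2 if kubectl itself fails.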

2.8.4 Viewing the cluster certificate signing requests

[root@master-02 ~]# kubectl get csr

NAME AGE REQUESTOR CONDITION

csr-4fsl6 47m system:bootstrap:tdi58m Approved,Issued

csr-hc9g7 4m system:bootstrap:tdi58m Approved,Issued

csr-jh7gj 55m system:bootstrap:tdi58m Approved,Issued

csr-k8psl 3m39s system:bootstrap:tdi58m Approved,Issued

csr-qcjln 36m system:bootstrap:tdi58m Approved,Issued

csr-vvtfq 2m53s system:bootstrap:tdi58m Approved,Issued

2.8.5 Creating a pod to verify the cluster network

[root@master-01 ~]# kubectl run net-test --image=alpine sleep 3600

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/net-test created

[root@master-01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test-88ff4d957-rnrsk 1/1 Running 0 21s 10.100.4.2 node-01.example.local <none> <none>

[root@master-01 ~]# kubectl exec -it net-test-88ff4d957-rnrsk -- sh

/ # ping www.baidu.com

PING www.baidu.com (14.119.104.189): 56 data bytes

64 bytes from 14.119.104.189: seq=0 ttl=127 time=8.608 ms

64 bytes from 14.119.104.189: seq=1 ttl=127 time=9.249 ms

64 bytes from 14.119.104.189: seq=2 ttl=127 time=9.576 ms

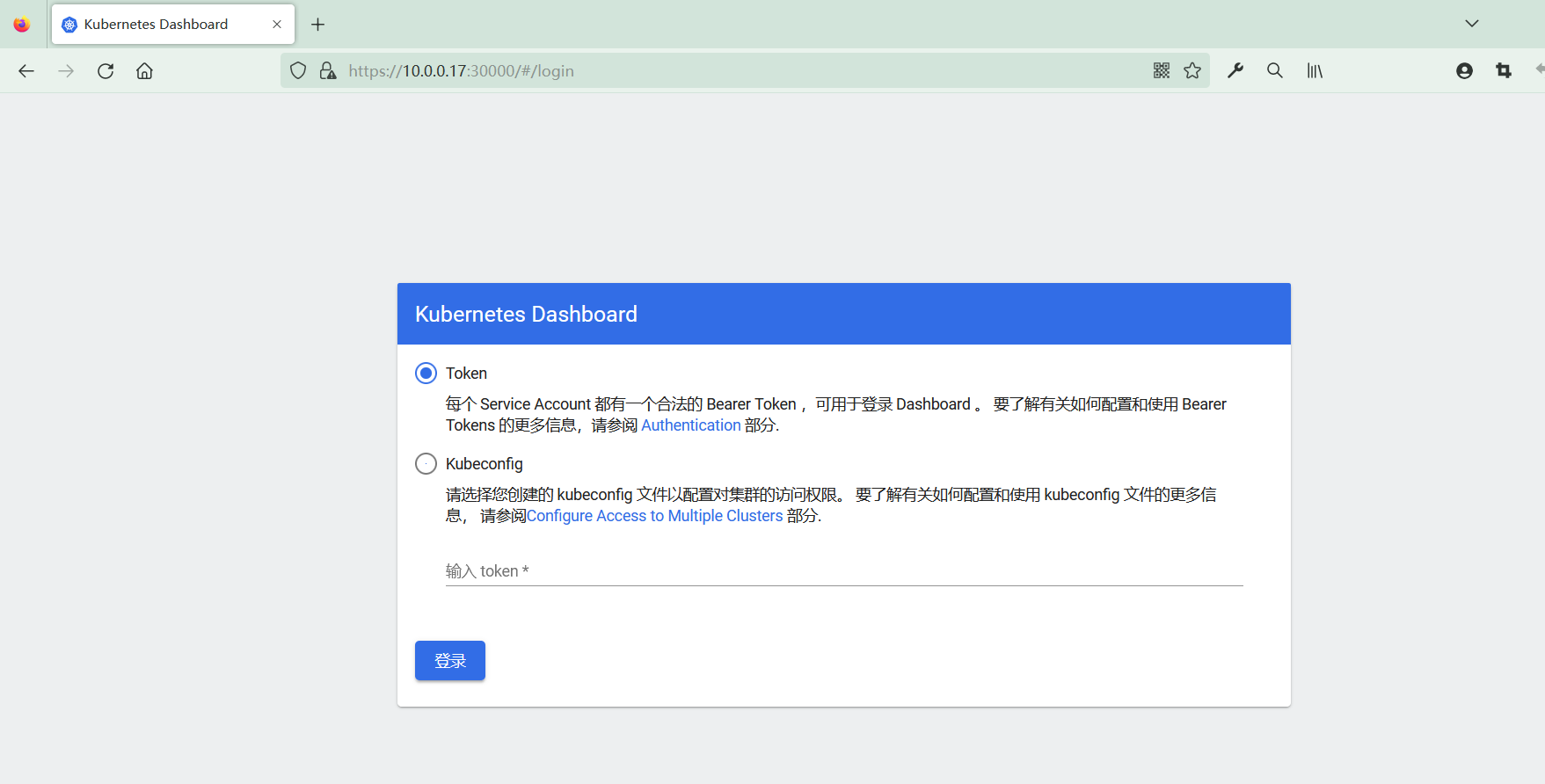

3. Deploying the Dashboard

3.1 Preparing the configuration file

[root@master-01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml

[root@master-01 ~]# mv recommended.yaml dashboard.yaml

# By default the Service is published as ClusterIP and is reachable only from inside the cluster; here it is changed to NodePort

[root@master-01 ~]# grep NodePort -C 10 dashboard.yaml

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

type: NodePort # added

ports:

- port: 443

targetPort: 8443

nodePort: 30000 # added

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

3.2 Deploying and verifying

[root@master-01 ~]# kubectl apply -f dashboard.yaml

# Check the status of the pods and services

[root@master-01 ~]# kubectl get pod,svc -n kubernetes-dashboard -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/dashboard-metrics-scraper-894c58c65-tsqb7 1/1 Running 0 32m 10.100.4.4 node-01.example.local <none> <none>

pod/kubernetes-dashboard-fc4fc66cc-vvbht 1/1 Running 0 32m 10.100.4.3 node-01.example.local <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dashboard-metrics-scraper ClusterIP 10.200.205.233 <none> 8000/TCP 32m k8s-app=dashboard-metrics-scraper

service/kubernetes-dashboard NodePort 10.200.198.74 <none> 443:30000/TCP 32m k8s-app=kubernetes-dashboard

# The node is listening on port 30000

[root@node-01 ~]# ss -ntl |grep 30000

LISTEN 0 32768 [::]:30000 [::]:*

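The dashboard is served over HTTPS with a private (self-signed) certificate, and Chrome and Edge refused to open it in this setup, so Firefox is used instead

Access to the dashboard requires authentication with a token or a kubeconfig file; a token is used here. The token is generated as follows

# Create the service account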

[root@master-01 ~]# kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard

serviceaccount/dashboard-admin created

# Grant permissions

[root@master-01 ~]# kubectl create clusterrolebinding dashboard-admin-rb --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin

# Get the token of the service account

[root@master-01 ~]# kubectl get secrets -n kubernetes-dashboard | grep dashboard-admin

dashboard-admin-token-wr4jl kubernetes.io/service-account-token 3 15s

[root@master-01 ~]# kubectl describe secrets dashboard-admin-token-wr4jl -n kubernetes-dashboard

Name: dashboard-admin-token-wr4jl

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 66e3f61f-9172-400c-b454-d9fb1a93be4c

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IndQay1pc3ctc3RyWDh3dXQtTHR1N09PLVVBdUExdXJkRVRQeFpmbWlmQ1kifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tN3NweDkiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMjQ5OTJlMzctNmI1Yi00ODZmLTkwYmUtYmQxYTU2NGM0MmJhIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.jXYf6lQQBW9kb3KUYpoAi57JbBFwMkUdx3Gb0jK4sN8E80WIM6lyGLktsTCmNoS21BN-bGyusqT5nNJAPhrVYaEjF6pSSLrd49LHF0Wetv04Jh5fdw-aHYuR15QKCZgGjedzuUHD4F8-6Ba5ZqMh67JjKI3Dhb-dIuBIVN3KLi2_D62F4VoCryZB3ExVfdRqZmQmI0EJ5XursMgCzL9v9VCPw9FatL604n5658CuXQNXc6uIpAVwuf3G_4uBoRQ-LcyPXov9JIoSv-qbxnotgZ2xXcHWZ7HIxa1pCRL8YG_bx03ZUE04GebjF9yNeki9Dxsp4gIxDcr09ByzO0gmgg

Paste the token above into the input box (without any extra spaces) to log in to the dashboard
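Copying the token out of the `kubectl describe` output by hand makes it easy to pick up stray whitespace. As a sketch, the two lookup steps above can be combined so that only the token is printed. The helper names `sa_secret_name` and `dashboard_token` are hypothetical, and the approach relies on the automatically created `dashboard-admin-token-*` secret shown above.

```shell
#!/bin/sh
# Read `kubectl get secrets` output on stdin and print the name of the first
# token secret belonging to the given service account (default: dashboard-admin).
sa_secret_name() {
    awk -v sa="${1:-dashboard-admin}" 'index($1, sa "-token-") == 1 { print $1; exit }'
}

# Print the decoded bearer token of the dashboard-admin service account.
dashboard_token() {
    name=$(kubectl get secrets -n kubernetes-dashboard | sa_secret_name)
    kubectl get secret "$name" -n kubernetes-dashboard \
        -o jsonpath='{.data.token}' | base64 -d
}
```

Running `dashboard_token` on master-01 prints the token without the surrounding fields.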

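4. Deploying nginx + tomcat on k8s

Goal: separate static and dynamic content between nginx and tomcat. Only a simple demonstration is given here

4.1 Deploying nginx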

[root@master-01 nginx]# pwd

/root/yaml/nginx

[root@master-01 nginx]# cat nginx.yml

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: default

name: nginx-deployment

labels:

app: nginx

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.18.0

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

labels:

app: nginx-service-labels

name: nginx-service

namespace: default

spec:

type: NodePort

ports:

- name: http-nginx

port: 80

protocol: TCP

targetPort: 80

nodePort: 30004

selector:

app: nginx

[root@master-01 nginx]# kubectl apply -f nginx.yml

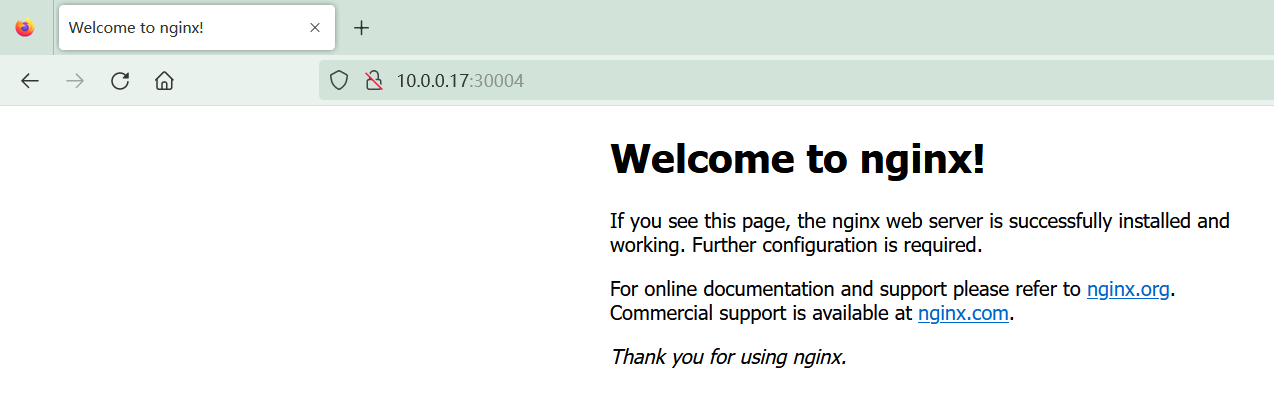

Check the deployment result

[root@master-01 nginx]# kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-deployment-d44c4d8f4-bftm4 1/1 Running 0 4m11s 10.100.3.3 node-01.example.local <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.200.0.1 <none> 443/TCP 162m <none>

service/nginx-service NodePort 10.200.231.164 <none> 80:30004/TCP 4m11s app=nginx

The output above shows that nginx was deployed successfully and that the pod was scheduled onto node-01

4.2 Deploying tomcat

[root@master-01 tomcat]# pwd

/root/yaml/tomcat

[root@master-01 tomcat]# cat tomcat.yml

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: default

name: tomcat-deployment

labels:

app: tomcat

spec:

replicas: 1

selector:

matchLabels:

app: tomcat

template:

metadata:

labels:

app: tomcat

spec:

containers:

- name: tomcat

image: tomcat

ports:

- containerPort: 8080

---

apiVersion: v1

kind: Service

metadata:

labels:

app: tomcat-service-label

name: tomcat-service

namespace:

spec:

type: NodePort

ports:

- name: tomcat-http

port: 80

protocol: TCP

targetPort: 8080

nodePort: 30005

selector:

app: tomcat

[root@master-01 tomcat]# kubectl apply -f tomcat.yml

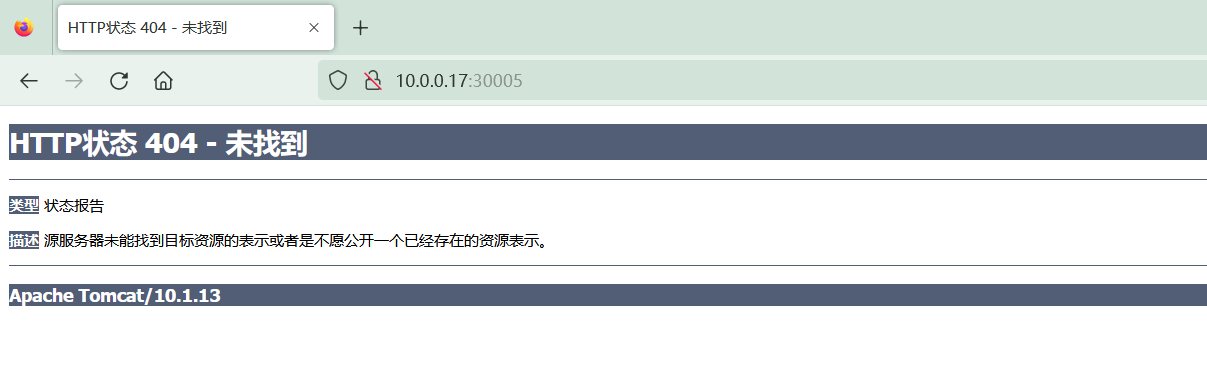

Check the deployment result

[root@master-01 ~]# kubectl get pod,svc -o wide

NAME                                     READY   STATUS    RESTARTS   AGE     IP           NODE                    NOMINATED NODE   READINESS GATES
pod/nginx-deployment-d44c4d8f4-bftm4     1/1     Running   0          4m11s   10.100.3.3   node-01.example.local   <none>           <none>
pod/tomcat-deployment-78c89857d6-9qtr9   1/1     Running   0          4m14s   10.100.3.2   node-01.example.local   <none>           <none>

NAME                     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE     SELECTOR
service/kubernetes       ClusterIP   10.200.0.1       <none>        443/TCP        162m    <none>
service/nginx-service    NodePort    10.200.231.164   <none>        80:30004/TCP   4m11s   app=nginx
service/tomcat-service   NodePort    10.200.206.119   <none>        80:30005/TCP   4m14s   app=tomcat

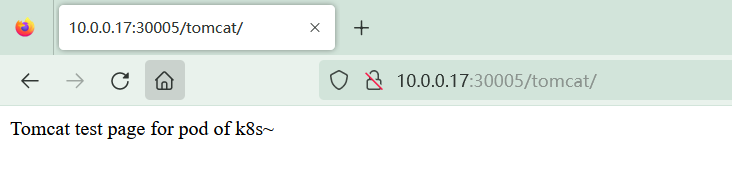

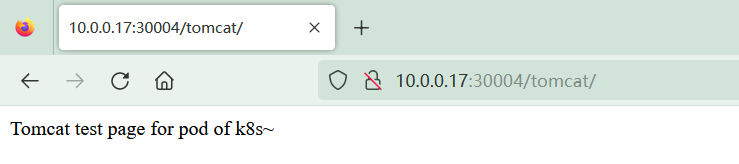
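Both services are of type NodePort, so they should answer on ports 30004 and 30005 of any node. A minimal sketch for checking this from the command line, assuming `curl` is installed; `check_http` is a hypothetical helper name and `NODE_IP` in the usage note is a placeholder for the address of one of your nodes:

```shell
# check_http: hypothetical helper that prints only the HTTP status code
# returned by a URL (000 means no HTTP response was received).
#   $1 = URL to request
check_http() {
  curl --silent --output /dev/null --max-time 5 \
    --write-out '%{http_code}' "$1"
}
```

Usage would look like `check_http "http://NODE_IP:30004/"` for nginx and `check_http "http://NODE_IP:30005/"` for tomcat. With recent official tomcat images the root path may well return 404, because the `webapps` directory ships empty; that is not a deployment failure.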

Create a temporary tomcat test page

[root@master-01 yaml]# kubectl get pod

NAME                                 READY   STATUS    RESTARTS   AGE
nginx-deployment-d44c4d8f4-bftm4     1/1     Running   0          6m18s
tomcat-deployment-78c89857d6-9qtr9   1/1     Running   0          6m21s

[root@master-01 yaml]# kubectl exec -it tomcat-deployment-78c89857d6-9qtr9 -- bash

root@tomcat-deployment-78c89857d6-9qtr9:/usr/local/tomcat# cd webapps

root@tomcat-deployment-78c89857d6-9qtr9:/usr/local/tomcat/webapps# mkdir tomcat

root@tomcat-deployment-78c89857d6-9qtr9:/usr/local/tomcat/webapps# echo "Tomcat test page for pod of k8s~" > tomcat/index.html

root@tomcat-deployment-78c89857d6-9qtr9:/usr/local/tomcat/webapps# exit

exit

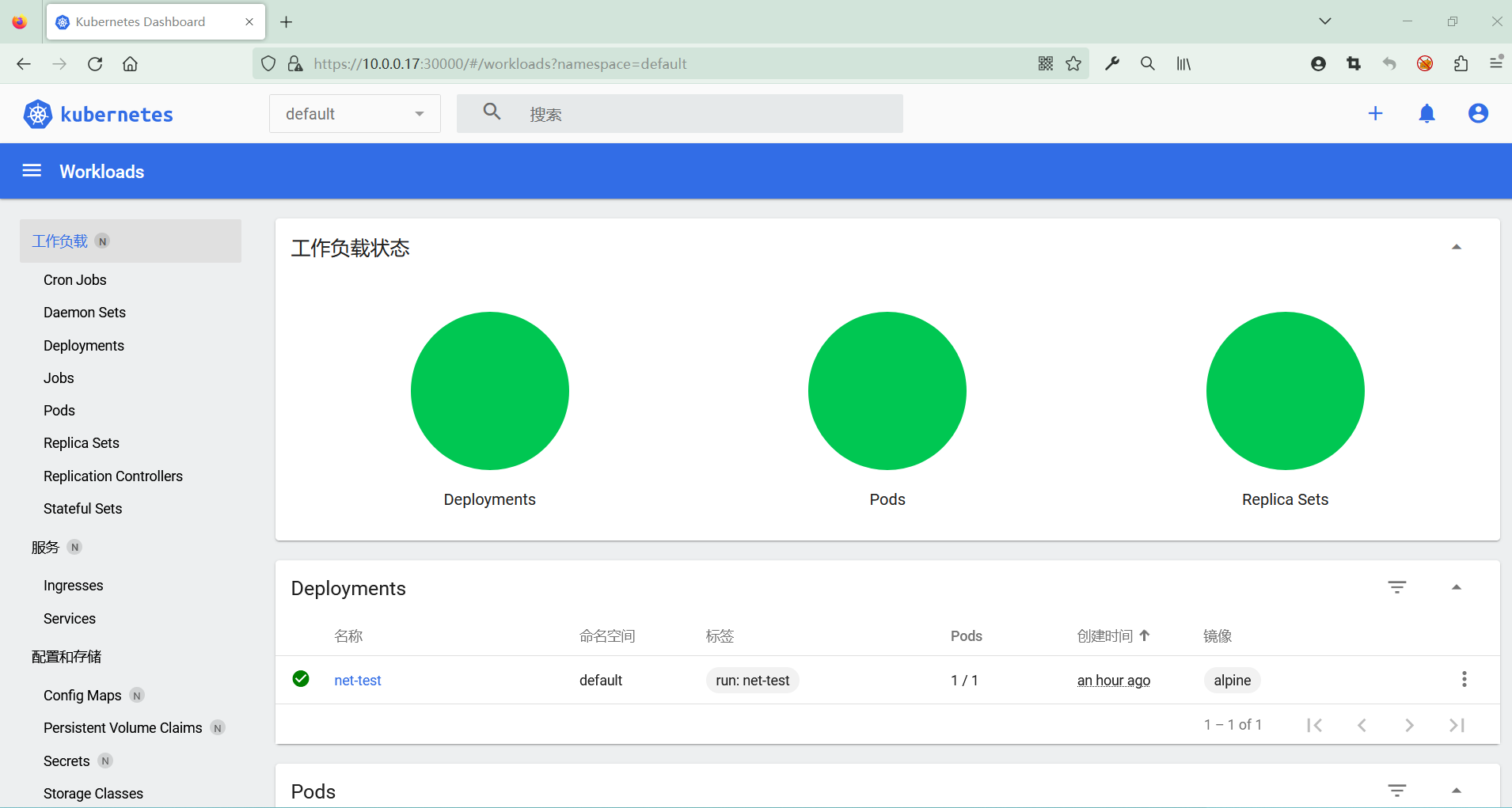

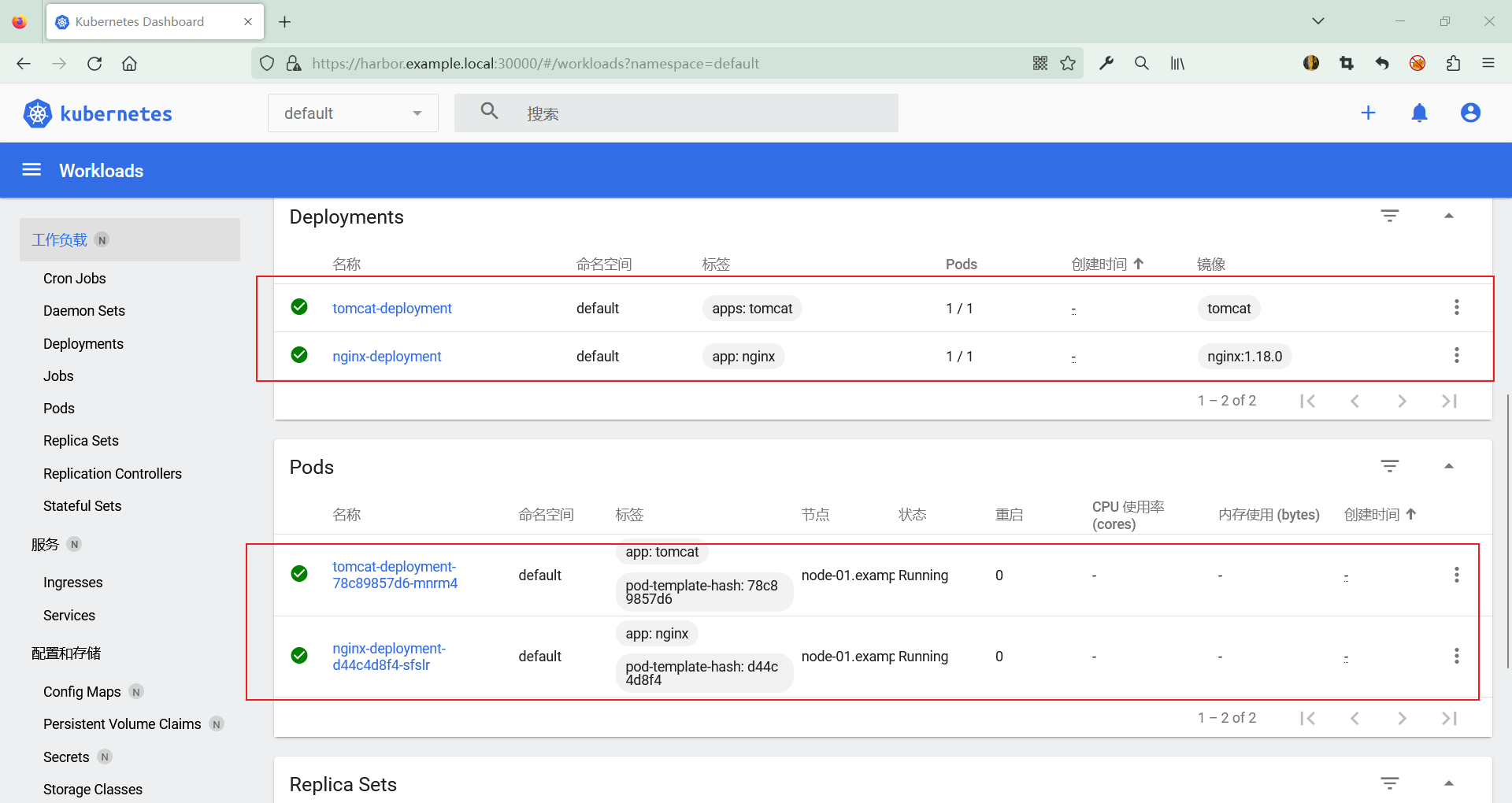
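The same test page can be written without opening an interactive shell, which is easier to repeat after a pod has been recreated. A minimal sketch, assuming the default `/usr/local/tomcat` layout seen in the prompt above; `create_test_page` is a hypothetical helper name:

```shell
# create_test_page: hypothetical helper that creates the test page inside a
# running tomcat pod with a single non-interactive `kubectl exec`.
#   $1 = name of the tomcat pod
create_test_page() {
  kubectl exec "$1" -- sh -c \
    'mkdir -p /usr/local/tomcat/webapps/tomcat &&
     echo "Tomcat test page for pod of k8s~" > /usr/local/tomcat/webapps/tomcat/index.html'
}
```

Either way, the file lives only in the container's writable layer, so it disappears when the pod is deleted or rescheduled.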

4.3 View the result in the dashboard

4.4 Configure nginx for static/dynamic separation

[root@master-01 ~]# kubectl get pod,svc

NAME                                     READY   STATUS    RESTARTS   AGE
pod/net-test-88ff4d957-krc9v             1/1     Running   0          44m
pod/nginx-deployment-d44c4d8f4-bftm4     1/1     Running   0          70m
pod/tomcat-deployment-78c89857d6-9qtr9   1/1     Running   0          70m

NAME                     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
service/kubernetes       ClusterIP   10.200.0.1       <none>        443/TCP        3h49m
service/nginx-service    NodePort    10.200.231.164   <none>        80:30004/TCP   70m
service/tomcat-service   NodePort    10.200.206.119   <none>        80:30005/TCP   70m
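Pod names carry a random suffix that changes whenever a pod is recreated, so a name copied from an earlier listing can go stale. A minimal sketch that looks the pod up by its label instead; `pod_by_label` is a hypothetical helper name, and it simply takes the first match:

```shell
# pod_by_label: hypothetical helper that prints the name of the first pod
# matching a label selector.
#   $1 = label selector, e.g. app=nginx
#   $2 = namespace (default: default)
pod_by_label() {
  kubectl get pod --namespace "${2:-default}" --selector "$1" \
    --output jsonpath='{.items[0].metadata.name}'
}
```

It can then be combined with exec, for example `kubectl exec -it "$(pod_by_label app=nginx)" -- bash`.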

[root@master-01 ~]# kubectl exec -it nginx-deployment-d44c4d8f4-bftm4 -- bash

root@nginx-deployment-d44c4d8f4-bftm4:/# cat > /etc/nginx/conf.d/default.conf << EOF
server {
    listen 80;
    listen [::]:80;
    server_name localhost;

    location /tomcat {
        proxy_pass http://tomcat-service.default.svc.example.local;
    }

    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/html;
    }
}
EOF

root@nginx-deployment-d44c4d8f4-bftm4:/# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

root@nginx-deployment-d44c4d8f4-bftm4:/# nginx -s reload

2023/10/02 11:44:15 [notice] 997#997: signal process started
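Because `proxy_pass` is given without a URI part, nginx forwards the original request path (`/tomcat/...`) to tomcat unchanged, so a request to `/tomcat/` on the nginx NodePort should end up at the test page under `webapps/tomcat`. A minimal sketch to check this, assuming `curl` is available; `fetch_via_nginx` is a hypothetical helper name:

```shell
# fetch_via_nginx: hypothetical helper that requests the tomcat test page
# through the nginx NodePort and prints the response body.
#   $1 = IP or hostname of any cluster node
#   $2 = nginx NodePort (default: 30004, as set in nginx.yml)
fetch_via_nginx() {
  curl --silent --fail --max-time 5 "http://$1:${2:-30004}/tomcat/"
}
```

If the earlier steps were followed, the body should be the text written to `index.html`. A non-zero exit status from `curl --fail` indicates that nginx or tomcat returned an HTTP error.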

5. k8s cluster management

5.1 Token management

[root@master-01 ~]# kubeadm # output of pressing Tab twice for completion

alpha completion config init join reset token upgrade version

[root@master-01 ~]# kubeadm token

create delete generate list
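Bootstrap tokens expire (kubeadm's default lifetime is 24 hours), so adding a node later usually means creating a fresh token. A minimal sketch; `print_join_command` is a hypothetical wrapper name around `kubeadm token create`:

```shell
# print_join_command: hypothetical wrapper that creates a new bootstrap token
# and prints the complete `kubeadm join` command that uses it.
#   $1 = token lifetime (default: 24h)
print_join_command() {
  kubeadm token create --ttl "${1:-24h}" --print-join-command
}
```

`kubeadm token list` shows the existing tokens with their remaining TTL, and `kubeadm token delete` revokes one.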

5.2 The reset command

If cluster initialization produced a wrong configuration, this command can be used to reset the environment.

[root@master-01 ~]# kubeadm reset

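kubeadm reset makes a best-effort attempt to revert the changes that kubeadm init or kubeadm join made on a node (usually a worker node), detaching it from the cluster and cleaning up the cluster-related configuration and data. Its main effects are:

Detaching the node from the cluster:

kubeadm reset stops the kubelet and deletes the node's certificates and the kubeconfig files under /etc/kubernetes, so the node no longer takes part in cluster communication or management. It does not delete the Node object from the API server, and it does not clean up the CNI configuration in /etc/cni/net.d, the iptables or IPVS rules, or the kubeconfig in $HOME/.kube. kubeadm prints a reminder to remove these by hand.

Cleaning up data:

kubeadm reset also removes the Kubernetes data on the node, including the containers started for the cluster, the kubelet state directory and, on a control-plane node with local etcd, the etcd data, so that no cluster-related leftovers remain.

The command is typically used in the following cases:

When Kubernetes has to be uninstalled or removed from a node, run kubeadm reset to clean the node, then reconfigure or redeploy Kubernetes.

In a test environment, when a node has to be reset for a new cluster configuration.

Note that kubeadm reset only acts on the node where it is run, and it should be used with care in production because it deletes the Kubernetes data on that node. To remove a node from the cluster, first run kubectl drain to move its workloads to other nodes, then run kubeadm reset on the node itself, and finally delete the Node object with kubectl delete node.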
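The removal order can be sketched as two small helpers that are run from a machine with kubectl access. The function names are hypothetical. Depending on the kubectl version, draining pods that use emptyDir volumes may also need `--delete-local-data` (1.19 and earlier) or `--delete-emptydir-data` (1.20 and later), which is left out here:

```shell
# drain_node: step 1, evict the workloads from the node.
# DaemonSet pods cannot be evicted, so they are skipped.
#   $1 = node name as shown by `kubectl get nodes`
drain_node() {
  kubectl drain "$1" --ignore-daemonsets
}

# Step 2 happens on the node being removed, not here:
#   kubeadm reset

# delete_node: step 3, remove the Node object from the cluster.
#   $1 = node name
delete_node() {
  kubectl delete node "$1"
}
```

For the cluster in this article that would be `drain_node node-01.example.local`, then `kubeadm reset` on node-01, then `delete_node node-01.example.local`.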

5.3 Check certificate expiration

[root@master-01 ~]# kubeadm alpha

certs kubeconfig kubelet selfhosting

[root@master-01 ~]# kubeadm alpha certs

certificate-key check-expiration renew

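# Check when the certificates expire. In a cluster deployed by kubeadm the component certificates are valid for 365d (one year), while the CA certificates last about ten years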

[root@master-01 ~]# kubeadm alpha certs check-expiration

[check-expiration] Reading configuration from the cluster...

[check-expiration] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

CERTIFICATE                EXPIRES                  RESIDUAL TIME   CERTIFICATE AUTHORITY   EXTERNALLY MANAGED
admin.conf                 Oct 01, 2024 07:58 UTC   364d                                    no
apiserver                  Oct 01, 2024 07:58 UTC   364d            ca                      no
apiserver-etcd-client      Oct 01, 2024 07:58 UTC   364d            etcd-ca                 no
apiserver-kubelet-client   Oct 01, 2024 07:58 UTC   364d            ca                      no
controller-manager.conf    Oct 01, 2024 07:58 UTC   364d                                    no
etcd-healthcheck-client    Oct 01, 2024 07:58 UTC   364d            etcd-ca                 no
etcd-peer                  Oct 01, 2024 07:58 UTC   364d            etcd-ca                 no
etcd-server                Oct 01, 2024 07:58 UTC   364d            etcd-ca                 no
front-proxy-client         Oct 01, 2024 07:58 UTC   364d            front-proxy-ca          no
scheduler.conf             Oct 01, 2024 07:58 UTC   364d                                    no

CERTIFICATE AUTHORITY   EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
ca                      Sep 29, 2033 07:58 UTC   9y              no
etcd-ca                 Sep 29, 2033 07:58 UTC   9y              no
front-proxy-ca          Sep 29, 2033 07:58 UTC   9y              no

5.4 Renew certificates

References:

https://www.qikqiak.com/post/update-k8s-10y-expire-certs/

https://www.chenshaowen.com/blog/how-to-renew-kubernetes-certs-manually.html

[root@master-01 ~]# kubeadm alpha certs renew

admin.conf apiserver-etcd-client etcd-healthcheck-client front-proxy-client

all apiserver-kubelet-client etcd-peer scheduler.conf

apiserver controller-manager.conf etcd-server

# Renew all certificates

[root@master-01 ~]# kubeadm alpha certs renew all

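Afterwards, restart the kube-apiserver, kube-controller-manager, and kube-scheduler containers. The expiry date of the certificate served by the apiserver can then be checked to confirm that the renewal succeeded: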

[root@master-01 ~]# docker ps |egrep "k8s_kube-apiserver|k8s_kube-scheduler|k8s_kube-controller"|awk '{print $1}'|xargs docker restart

c2d987734f5a

043af8733130

5a87240ba97a

[root@master-01 ~]# echo | openssl s_client -showcerts -connect 127.0.0.1:6443 -servername api 2>/dev/null | openssl x509 -noout -enddate

notAfter=Oct 1 13:17:56 2024 GMT
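For monitoring, the `notAfter` date is easier to act on as a number of remaining days. A minimal sketch, assuming GNU `date` (its `-d` option is not available in BSD `date`); `days_left` is a hypothetical helper name:

```shell
# days_left: hypothetical helper that prints the whole number of days between
# a reference time and an OpenSSL-style expiry date. Requires GNU date.
#   $1 = expiry date, e.g. "Oct 1 13:17:56 2024 GMT"
#   $2 = reference time in epoch seconds (default: now)
days_left() {
  expiry=$(date -u -d "$1" +%s) || return 1
  now=${2:-$(date -u +%s)}
  echo $(( (expiry - now) / 86400 ))
}
```

It can be fed from the command above by keeping only the part after `notAfter=`, for example with `cut -d= -f2`. A negative result means the certificate has already expired.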

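6. k8s cluster upgrade

A cluster deployed with kubeadm has to be upgraded with kubeadm. First upgrade kubeadm itself to the target version, then continue with the remaining steps.

6.1 Upgrade preparation

Target version for this upgrade: v1.19.2

Check the current version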

[root@master-01 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.11", GitCommit:"ea5f00d93211b7c80247bf607cfa422ad6fb5347", GitTreeState:"clean", BuildDate:"2020-08-13T15:17:52Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}

# Check whether the yum repository provides the target version

[root@master-01 ~]# yum list kubectl kubeadm kubelet --showduplicates | grep 1.19.2

kubeadm.x86_64 1.19.2-0 kubernetes

kubectl.x86_64 1.19.2-0 kubernetes

kubelet.x86_64 1.19.2-0 kubernetes

6.2 Upgrade the master nodes

Upgrade the master nodes one at a time (rolling upgrade)

# Install the deployment tools

yum install -y kubelet-1.19.2 kubectl-1.19.2 kubeadm-1.19.2

[root@master-01 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.2", GitCommit:"f5743093fd1c663cb0cbc89748f730662345d44d", GitTreeState:"clean", BuildDate:"2020-09-16T13:38:53Z", GoVersion:"go1.15", Compiler:"gc", Platform:"linux/amd64"}

Check the upgrade plan

[root@master-01 ~]# kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade/config] FATAL: this version of kubeadm only supports deploying clusters with the control plane version >= 1.18.0. Current version: v1.17.11 # the cluster is older than 1.18.0, so this kubeadm release cannot upgrade it

To see the stack trace of this error execute with --v=5 or higher
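The error comes from the fact that kubeadm upgrades one minor version at a time: a v1.17 cluster has to go through v1.18 before it can reach v1.19.2. A minimal sketch of that check, with hypothetical helper names, which assumes both versions share the same major version and use the `vMAJOR.MINOR.PATCH` form:

```shell
# minor_of: prints the minor number of a version string, e.g. v1.17.11 -> 17
minor_of() {
  echo "$1" | cut -d. -f2
}

# can_upgrade: succeeds when the target is in the same minor release as the
# current version or exactly one minor release above it.
#   $1 = current cluster version, $2 = target version
can_upgrade() {
  skew=$(( $(minor_of "$2") - $(minor_of "$1") ))
  [ "$skew" -ge 0 ] && [ "$skew" -le 1 ]
}
```

Here `can_upgrade v1.17.11 v1.19.2` fails, matching the FATAL message above, while a target within the 1.18 series would pass.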

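If the target version meets kubeadm's requirement (at most one minor version above the current cluster version), continue with the following step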

[root@master-01 ~]# kubeadm upgrade apply v1.19.2

After the upgrade completes, the image versions can be checked

# docker images

6.3 Upgrade the worker nodes

These are also upgraded one at a time (rolling upgrade)

# Install the deployment tools

yum install -y kubelet-1.19.2 kubectl-1.19.2 kubeadm-1.19.2

# Run the upgrade

kubeadm upgrade node --kubelet-version 1.19.2
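Installing the new kubelet package does not restart the running kubelet, so the upgrade only takes effect once the service is restarted. A minimal sketch of the steps that usually follow, assuming a systemd-based host; the helper names are hypothetical, and the uncordon step only applies if the node was drained with `kubectl drain` beforehand:

```shell
# restart_kubelet: run on the upgraded node. Reloads the systemd unit files
# and restarts the kubelet so that the new binary is used.
restart_kubelet() {
  systemctl daemon-reload &&
    systemctl restart kubelet
}

# uncordon_node: run from a machine with kubectl access. Marks a previously
# drained node as schedulable again.
#   $1 = node name as shown by `kubectl get nodes`
uncordon_node() {
  kubectl uncordon "$1"
}
```

`kubectl get nodes` should then report the new kubelet version in its VERSION column for that node.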